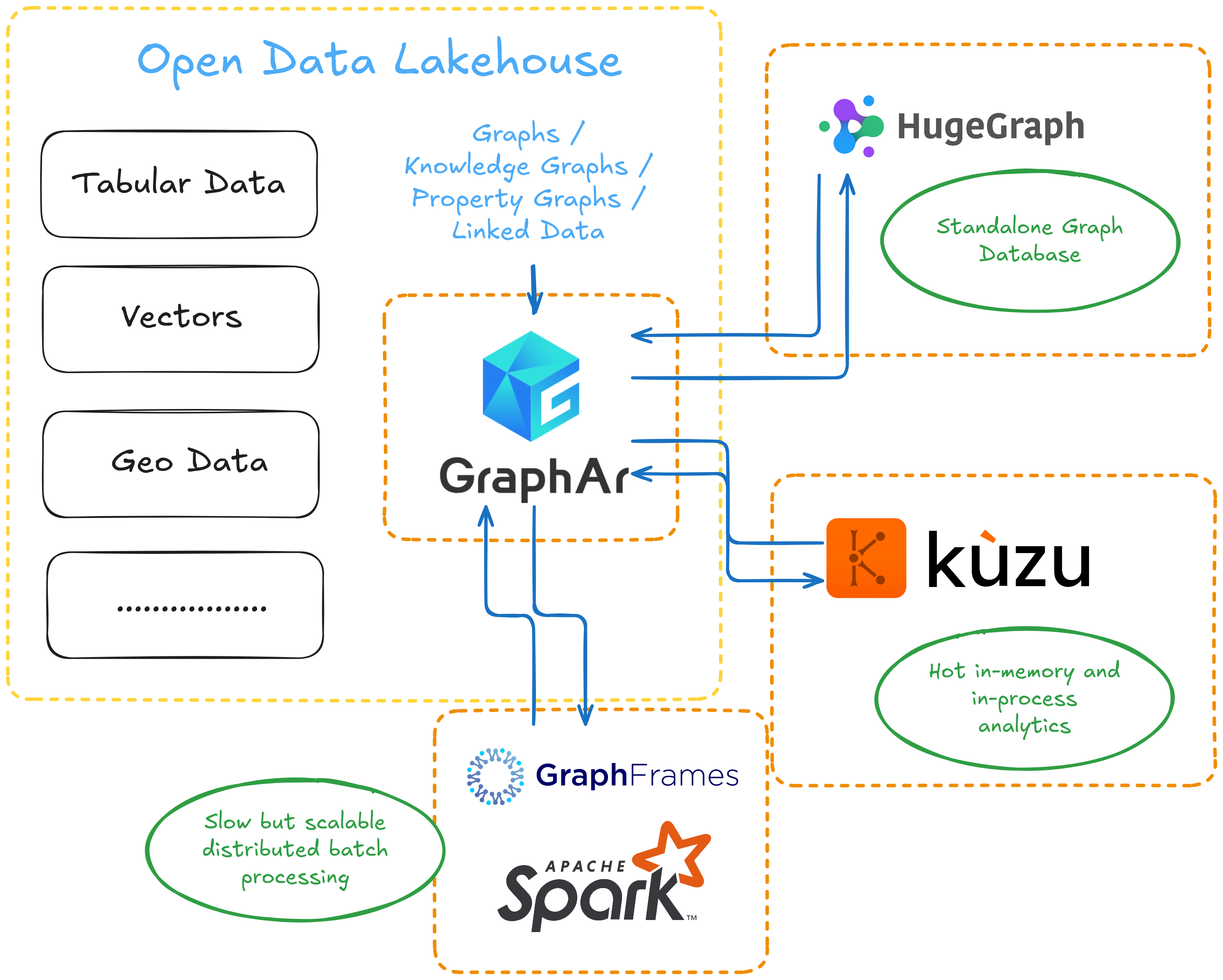

Graph Embeddings at scale with Spark and GraphFrames

In this blog post, I will recount my experience adding the graph embeddings API to the GraphFrames library. I will start with a high-level overview of the vertex representation learning task and the existing approaches, and then focus on generating embeddings from random walks. I will explain the intuition behind Random Walks in GraphFrames and the details of their implementation, as well as the trade-offs I chose and the resulting limitations. Next, I will explain the possible ways to learn vertex representations from a sequence of graph vertices. I will first analyze the common approach of using the word2vec model and its limitations, especially those of the Apache Spark ML Word2Vec implementation, and then present my analysis of alternative approaches with their respective advantages and disadvantages. After that, I will explain the intuition behind the Hash2vec approach that I chose as a starting point for GraphFrames, as well as the details of its implementation in Scala and Spark. Finally, I will present an experimental comparison of Hash2vec and Word2vec embeddings and share my thoughts about future directions.