Spark-Connect: I'm starting to love it!

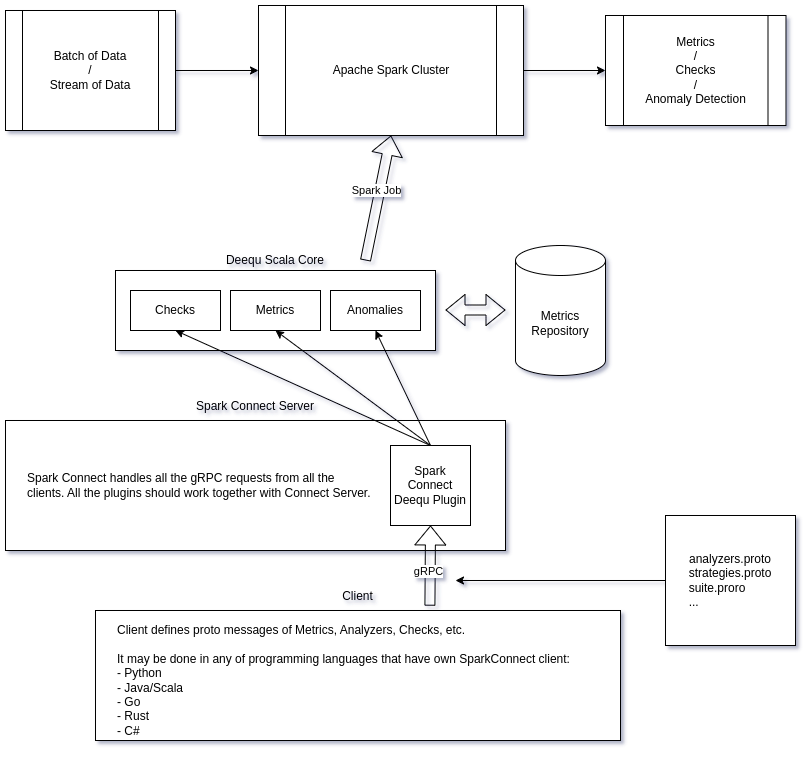

Summary: This blog post is a detailed story about how I ported a popular data quality framework, AWS Deequ, to Spark-Connect. Deequ is a very cool, reliable, and scalable framework that computes metrics, checks, and anomaly detection suites on data using an Apache Spark cluster. However, the Deequ core is a Scala library that relies on many low-level Apache Spark APIs for better performance, so it cannot run directly in a Spark-Connect environment. To solve this problem, I defined protobuf messages for all the main Deequ structures (Check, Analyzer, AnomalyDetectionStrategy, etc.), wrote a helper object that re-creates the Deequ structures from the corresponding protobuf messages, and finally built a native Spark-Connect plugin that processes Deequ-specific messages, constructs DQ suites from them, computes the report, and returns the result to the Spark-Connect client. I tested my solution with PySpark Connect 3.5.1, but it should work with any of the existing Spark-Connect clients (Spark-Connect Java/Scala, Spark-Connect Go, Spark-Connect Rust, Spark-Connect C#, etc.). ...
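To make the flow above a bit more concrete, here is a minimal, self-contained Python sketch of the idea. It is only an illustration under loud assumptions, not the actual plugin code: the names `AnalyzerMessage`, `CheckSuiteMessage`, `rebuild_analyzer`, and `handle_suite` are hypothetical, JSON stands in for protobuf serialization, and a plain list of dicts stands in for a Spark DataFrame. Only the analyzer names `Completeness` and `Maximum` are borrowed from Deequ.

```python
import json
import math
from dataclasses import asdict, dataclass, field
from typing import Callable, Dict, List, Optional

# Stand-in for a DataFrame row (assumption: one nullable numeric value per column).
Row = Dict[str, Optional[float]]


@dataclass
class AnalyzerMessage:
    """Hypothetical wire message describing one analyzer."""
    kind: str    # e.g. "Completeness" or "Maximum"
    column: str


@dataclass
class CheckSuiteMessage:
    """Hypothetical wire message describing a whole DQ suite."""
    analyzers: List[AnalyzerMessage] = field(default_factory=list)

    def serialize(self) -> bytes:
        # JSON is used here only as a stand-in for protobuf encoding.
        return json.dumps(asdict(self)).encode("utf-8")

    @staticmethod
    def parse(payload: bytes) -> "CheckSuiteMessage":
        raw = json.loads(payload.decode("utf-8"))
        return CheckSuiteMessage([AnalyzerMessage(**a) for a in raw["analyzers"]])


def completeness(rows: List[Row], column: str) -> float:
    """Fraction of rows where the column is not null."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(column) is not None) / len(rows)


def maximum(rows: List[Row], column: str) -> float:
    """Largest non-null value of the column, NaN if there is none."""
    values = [r[column] for r in rows if r.get(column) is not None]
    return max(values, default=math.nan)


_ANALYZERS: Dict[str, Callable[[List[Row], str], float]] = {
    "Completeness": completeness,
    "Maximum": maximum,
}


def rebuild_analyzer(msg: AnalyzerMessage) -> Callable[[List[Row]], float]:
    """Server-side helper: re-create a runnable analyzer from its message."""
    if msg.kind not in _ANALYZERS:
        raise ValueError(f"Unknown analyzer kind: {msg.kind}")
    fn = _ANALYZERS[msg.kind]
    return lambda rows: fn(rows, msg.column)


def handle_suite(payload: bytes, rows: List[Row]) -> Dict[str, float]:
    """Plugin-like entry point: decode the suite, run it, return the report."""
    suite = CheckSuiteMessage.parse(payload)
    report: Dict[str, float] = {}
    for msg in suite.analyzers:
        report[f"{msg.kind}({msg.column})"] = rebuild_analyzer(msg)(rows)
    return report


# "Client" side: describe the suite as messages and send only the bytes.
rows = [{"price": 10.0}, {"price": None}, {"price": 30.0}, {"price": 20.0}]
suite = CheckSuiteMessage(
    [AnalyzerMessage("Completeness", "price"), AnalyzerMessage("Maximum", "price")]
)
report = handle_suite(suite.serialize(), rows)
print(report)
```

The point of the sketch is the split: the client only builds and serializes messages, while everything that touches the data lives behind `handle_suite`. That is why, in principle, any client that can produce the same messages could drive the same server-side logic.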